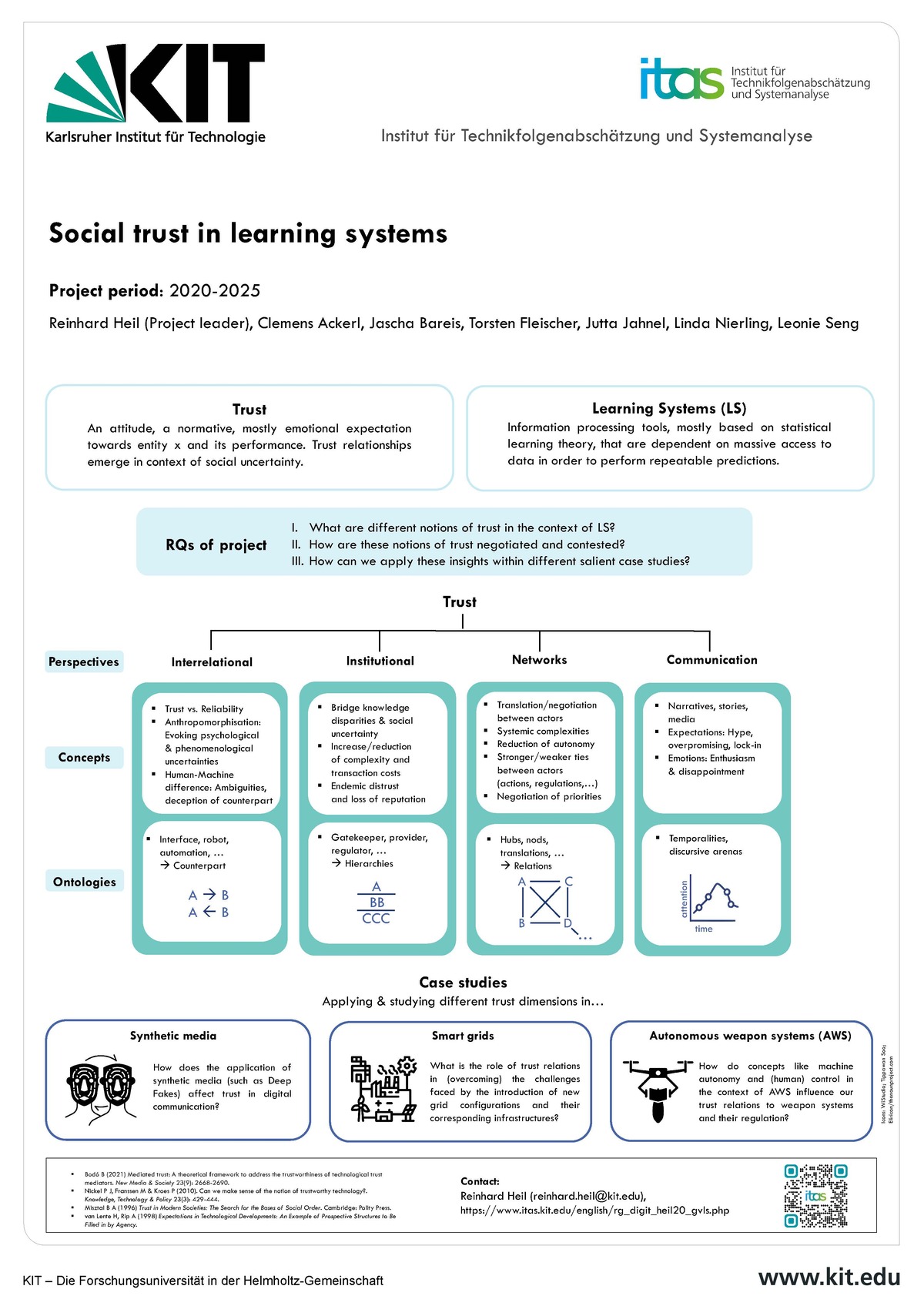

Social trust in learning systems

- Project team:

Reinhard Heil (project leader), Jutta Jahnel, Torsten Fleischer, Linda Nierling, Jascha Bareis, Clemens Ackerl, Christian Wadephul (2020-2021), Pascal Vetter (2020-2022), Leonie Seng (2022-2023), Elena Fantino

- Start date:

2020

- End date:

2025

- Research group:

Project description

1st project phase (2020-2022)

As part of the basic-funded in-house research at ITAS, the project addresses research questions that, within program-oriented funding, contribute to developing the ITAS profile in the Helmholtz Research Field Information, in the thematic area “Learning Systems”.

The research focuses primarily on developing and framing a context-specific understanding of the processes, methods, and consequences of learning systems as “enabling technologies”. It also addresses ways of responsibly handling potential risks in various fields of application. Since the overarching problem of trust in information systems also arises in automated applications and decision-making systems, the research focuses in particular on questions of societal trust in learning systems. It builds on the project members’ previous and parallel work on risk research, autonomous driving, automation of the world of work, adversarial AI, explainable AI, and the philosophy of science in computational sciences, which will be developed further or applied within the thematic field. One of the project’s important tasks is to bring together the previously unconnected work on this topic at ITAS and to develop a common perspective. This also includes organizing knowledge transfer across projects and research groups and intensifying cooperation with the KIT computer science institutes.

2nd project phase (2023-2025)

The first project phase confirmed the assumption that societal trust in learning systems is an overarching topic of growing societal (and political) relevance. Trust is understood as an important resource not only in the AI discourse, but in the digitalization discourse in general.

In the second project phase, the conceptual work will be continued and further specified. The focus will be on:

- Trust in regulatory institutions and its interrelation with trust in AI: trust in AI must be understood as a socio-technical phenomenon that is influenced by multiple actors and by political dynamics across technologies. These dynamics include, in particular, current efforts to regulate AI at the EU level. However, this regulatory process still lacks conceptual clarity and empirical knowledge.

- The relevance of trust in dealing with mis- and disinformation and its impact on the formation of societal and political opinion: artificial intelligence already plays an important role in this regard and will become even more important.

- The role of trust in AI-driven automation processes: given the high context specificity of artificial intelligence, constellations of trust in AI will be examined in selected contexts where great potential for social change can be expected, e.g., the world of work, mobility, and energy.

Publications

Structuring different manifestations of misinformation for better policy development using a decision tree‐based approach

2025. Policy & Internet, 17 (2). doi:10.1002/poi3.420

Generative KI und Demokratie

2025. Österreichische Akademie der Wissenschaften (ÖAW). doi:10.5445/IR/1000180085

The trustification of AI: Disclosing the bridging pillars that tie trust and AI together

2025, May 20. Conference: "Trust in the Age of AI", Hungarian Academy of Sciences, Veszprém Regional Committee (2025), Online, May 20, 2025

Sprechende Maschinen

2025. "(Wie) versteht sich Gesellschaft heute?" Symposium des Karlsruher Forums für Kultur, Recht und Technik e.V. (2025), Karlsruhe, Germany, October 9, 2025

(Generative) KI - Herausforderungen

2025, March 6. Stab und Strategie (2025), Karlsruher Institut für Technologie, March 6, 2025

Erfahrungen mit Elicit als Recherchetool

2025. Treffen der NTA-AG Generative KI und TA (2025), Online, June 24, 2025

Wahrheit unter falschem Gesicht: Deepfakes auf der Spur

2025. Johannisnacht der Evangelischen Akademie Frankfurt (2025), Frankfurt am Main, Germany, June 28, 2025

KI-Textgeneratoren als soziotechnisches Phänomen – Ansätze zur Folgenabschätzung und Regulierung

2024. KI:Text – Diskurse über KI-Textgeneratoren. Ed.: G. Schreiber, 341–354, De Gruyter. doi:10.1515/9783111351490-021

The trustification of AI. Disclosing the bridging pillars that tie trust and AI together

2024. Big Data & Society, 11 (2). doi:10.1177/20539517241249430

Künstliche Intelligenz außer Kontrolle?

2024. TATuP - Zeitschrift für Technikfolgenabschätzung in Theorie und Praxis, 33 (1), 64–67. doi:10.14512/tatup.33.1.64

What do algorithms explain? The issue of the goals and capabilities of Explainable Artificial Intelligence (XAI)

2024. Humanities and Social Sciences Communications, 11 (1), Art.-Nr.: 760. doi:10.1057/s41599-024-03277-x

TA for human security: Aligning security cultures with human security in AI innovation

2024. TATuP - Zeitschrift für Technikfolgenabschätzung in Theorie und Praxis, 33 (2), 16–21. doi:10.14512/tatup.33.2.16

Ask Me Anything! How ChatGPT Got Hyped Into Being

2024. Center for Open Science (COS). doi:10.31235/osf.io/jzde2

Technikfolgenabschätzung (TA) & ihr Verhältnis zur Antizipierbarkeit von Zukünften

2024. Ethik des Human Enhancement - am Beispiel Neurotech. Pilot Modul "Technikfolgenabschätzung, Nachhaltigkeit und Responsible Innovation", Studiengang Human-Centered Computing, FH Hagenberg (2024), Hagenberg im Mühlkreis, Austria, November 16, 2024

"Talking ‘bout methods": Reflecting choices, pathways and limitations

2024. KLIREC (Klima, Ressourcen und Circular Economy) Colloquium on Research Design and Methods "Kooperatives Promotionskolleg KLIREC" (2024), Pforzheim, Germany, June 10, 2024

Vorausschauende Praktiken (Anticipatory practices) in der Energiewende als sozio-technische Transformation: Konzeptionelle Verflechtungen von Vertrauen, KI-/ML-basierten Praktiken & Flexibilität

2024. Fachsymposium ITAS-BAuA „KI in der Arbeitswelt: Systematiken zur Gestaltung und Technikfolgenabschätzungen“ (2024), Dresden, Germany, October 24–25, 2024

Closing the gap between acceptance and acceptability? Conceptualising the role of trust via the example of the energy transition as socio-technical transformation

2024. European Association for the Study of Science and Technology and the Society for Social Studies of Science: Conference Making and Doing Transformations (EASST/4S 2024), Amsterdam, Netherlands, July 16–19, 2024

Anticipatory Practices within the Development of Smart Grids: Conceptual Entanglements of Trust, AI, & Flexibility

2024. Interdisciplinary International Graduate Summer School "The transformation challenge: Re-Thinking cultures of research" (2024), San Sebastian, Spain, July 1–5, 2024

In den Prozess vertrauen? Konzeptionelle Verflechtungen von künstlicher Intelligenz, Flexibilität und Vertrauen in der Infrastrukturtransformation des Energienetzes als sozio-technisches System

2024. Tagung des DGS-Arbeitskreises „Soziologie der Nachhaltigkeit“ (SONA) und der DGS-Sektion Umwelt- und Nachhaltigkeitssoziologie (2024), Universität Stuttgart, June 6–7, 2024

Anticipatory Practices within the Development of Smart Grids: Conceptual Entanglements of Trust, Artificial Intelligence, and Flexibility

2024. The EU-SPRI Forum Annual Conference "Governing technology, research, and innovation for better worlds" (2024), Enschede, Netherlands, June 5–7, 2024

Trust (in) the process? Accounting for trust dimensions within urgent socio-technical transformation processes as a challenge for both policymakers and transdisciplinary TA

2024. 11. Konferenz des Netzwerks Technikfolgenabschätzung "Politikberatungskompetenzen heute" (NTA 2024), Berlin, Germany, November 18–20, 2024

Hype: opportunist appropriation or neglected future concept?

2024. GVLS Group Open Discussion Session (2024), Karlsruhe, Germany, November 26, 2024

Dimensionen des Vertrauens bei algorithmischen Systemen

2024, September 30. Dimensionen des Vertrauens bei algorithmischen Systemen, Zentrum verantwortungsbewusste Digitalisierung (ZEVEDI 2024), Frankfurt am Main, Germany, September 30, 2024

Generative KI für TA

2024. 11. Konferenz des Netzwerks Technikfolgenabschätzung "Politikberatungskompetenzen heute" (NTA 2024), Berlin, Germany, November 18–20, 2024

Nicht in der Welt: Benötigen LLMs Erfahrungswissen?

2024. BAUA (2024), Dresden, Germany, October 24–25, 2024

Towards a Pragmatical Grounding of Large Language Models

2024. Forum on Philosophy, Engineering, and Technology (fPET 2024), Karlsruhe, Germany, September 17–19, 2024

Generative KI in der Wissenschaft

2024, October 28. Weiterbildungsprogramm "Künstliche Intelligenz in der Wissenschaft" der Potsdam Graduate School (2024), Potsdam, Germany, October 28, 2024

Künstliche Intelligenz und die Tücken der Anthropomorphisierung

2024. Führungsakademie Baden-Württemberg (2024), Karlsruhe, Germany, September 26, 2024

Gesellschaftliche Folgen künstlicher Intelligenz

2024. Europäischer AI Act : Vortrag und Diskussion zu Chancen, Risiken und Europarecht (2024), Karlsruhe, Germany, March 15, 2024

KI-generierte Inhalte als gesellschaftliche Herausforderung

2024. Zwischen Risiko und Sicherheit: Den digitalen Wandel gestalten (2024), Karlsruhe, Germany, February 15, 2024

Die KI-Verordnung – Zwei Meinungen zu den Anforderungen für und Fragen an unsere künftige Forschung

2024. Fachsymposium ITAS-BAuA „KI in der Arbeitswelt: Systematiken zur Gestaltung und Technikfolgenabschätzungen“ (2024), Dresden, Germany, October 24–25, 2024

Wie KI-generierte Desinformation unsere Gesellschaft gefährdet

2024, October 22. Treffen des Arbeitskreises KI der IHK bei Vision Tools Bildanalyse Systeme GmbH (2024), Waghäusel, Germany, October 22, 2024

Deepfakes und Demokratie

2024, October 8. E-Learning Kurs Digitale Ethik, Landeszentrale für Politische Bildung (2024), Online, October 8, 2024

Generative künstliche Intelligenz - warum betrifft das unsere Demokratie?

2024, October 2. Lange Nacht der Demokratie (2024), Starnberg, Germany, October 2, 2024

Gefährdet KI-generierte Desinformation unsere Demokratie?

2024. Wie politisch sind ChatGPT & Co.? (2024), Online, January 25–26, 2024

Generative KI als Instrument in der Politikberatung – Bündelung von Erfahrungen und Reflexion aus der Praxis

2024. 11. Konferenz des Netzwerks Technikfolgenabschätzung "Politikberatungskompetenzen heute" (NTA 2024), Berlin, Germany, November 18–20, 2024

Generative KI in der Politikberatung – brauchen wir spezifische Leitlinien?

2024. TA24 - Methoden für die Technikfolgenabschätzung : im Spannungsfeld zwischen bewährter Praxis und neuen Möglichkeiten (2024), Vienna, Austria, June 3–4, 2024

Technology hypes: Practices, approaches and assessments

2023. TATuP - Zeitschrift für Technikfolgenabschätzung in Theorie und Praxis, 32 (3), 11–16. doi:10.14512/tatup.32.3.11

Digitalisierung, Theologie und Technikfolgenabschätzung: Interview mit Gernot Meier

2023. TATuP - Zeitschrift für Technikfolgenabschätzung in Theorie und Praxis, 32 (2), 58–61. doi:10.14512/tatup.32.2.58

Technology hype : Dealing with bold expectations and overpromising = Technologie-Hype: Der Umgang mit überzogenen Erwartungen und Versprechungen

2023. Oekom Verlag

Einige ethische Implikationen großer Sprachmodelle

2023. Karlsruher Institut für Technologie (KIT). doi:10.5445/IR/1000158914

How governance fails to comprehend trustworthy AI

2023. 6th International Conference on Public Policy (ICPP6 - Toronto 2023), Online, June 27–29, 2023

Trustworthy AI and its regulatory discontents

2023. Austrian Institute of Technology - AI Ethics Lab (2023), Online, March 23, 2023

The Torn Regulatory State: Governing Trustworthy AI in a Contested Field

2023. Deutsches Zentrum für Luft- und Raumfahrt (DLR 2022), Bremerhaven, Germany, January 19, 2023

Unkontrollierbare künstliche Intelligenz – ein existenzielles Risiko?

2023. Montagskonferenz des Instituts für Übersetzen und Dolmetschen (2023), Heidelberg, Germany, November 23, 2023

Künstliche Intelligenz - Einführung

2023, October 4. Volkshochschule (2023), Munich, Germany, October 4, 2023

ChatGPT und die Folgen

2023. ChatGPT & Co: Kluge Nutzung für den Erfolg im Business und im Alltag (2023), Online, July 18, 2023

Einige ethische Implikationen großer Sprachmodelle

2023. Sondersitzung des HND-BW Lenkungskreises und HND-BW Expert*innenkreises "Standortbestimmung: Auswirkungen von ChatGPT auf Lehre und Studium" (2023), Online, April 25, 2023

ChatGPT und andere Computermodelle zur Sprachverarbeitung – Grundlagen, Anwendungspotenziale und mögliche Auswirkungen aus dem TAB Hintergrundpapier

2023. QFC - Qualifizierungsförderwerk Chemie GmbH (2023), Online, December 7, 2023

Deepfakes betreffen uns alle!

2023, November 23. Podiumsveranstaltung aus der Reihe #digitalk (2023), Karlsruhe, November 23, 2023

Die Gefahr von KI für die Demokratie

2023, October 31. Bremer Gesellschaft zu Freiburg im Breisgau (2023), Freiburg, Germany, October 31, 2023

Chat GPT und der Tanz um die künstliche Intelligenz

2023. Podiumsdiskussion : CSR-Circle und HR-Circle (2023), Online, May 3, 2023

Deepfakes und ChatGPT: Von generierten Gesichtern und stochastischen Papageien

2023. ITA-Seminar der Österreichischen Akademie der Wissenschaften (ÖAW) (2023), Vienna, Austria, May 2, 2023

Deepfakes: A growing threat to the EU institutions’ security

2023. Interinstitutional Security and Safety Days (2023), Online, March 2, 2023

Talking AI into Being: The Narratives and Imaginaries of National AI Strategies and Their Performative Politics

2022. Science, technology, & human values, 47 (5), 855–881. doi:10.1177/01622439211030007

Trustworthy AI. What does it actually mean?

2022. WTMC PhD Spring Workshop "Trust and Truth" (2022), Deursen-Dennenburg, Netherlands, April 6–8, 2022

Two layers of trust: Discussing the relationship of an ethical and a regulatory dimension towards trustworthy AI

2022. Trust in Information (TIIN) Research Group at the Höchstleistungsrechenzentrum (HLRS) Stuttgart (2022), Stuttgart, Germany, February 16, 2022

Trust (erosion) in AI regulation. Dimensions, Drivers, Contradictions?

2022. 20th Annual STS Conference : Critical Issues in Science, Technology and Society Studies (2022), Graz, Austria, May 2–4, 2022

"I’m sorry Dave, I’m afraid I can’t do that“ – Von Vertrauen, Verlässlichkeit und babylonischer Sprachverwirrung

2022. 37. AIK-Symposium "Vertrauenswürdige Künstliche Intelligenz" (2022), Karlsruhe, Germany, October 28, 2022

Erklären und Vertrauen im Kontext von KI

2022. Fachsymposium ITAS-BAuA "Digitalisierung in der Arbeitswelt: Konzepte, Methoden und Gestaltungsbedarf" (2022), Karlsruhe, Germany, November 2–3, 2022

Künstliche Intelligenz - Einführung für die Jahrgangsstufe 11

2022. Theodor-Heuss-Gymnasium (2022), Mühlacker, Germany, October 11, 2022

Künstliche Intelligenz - Einführung für die Jahrgangsstufe 10

2022. Theodor-Heuss-Gymnasium (2022), Mühlacker, Germany, October 11, 2022

Was wir von der KI-Regulierung in der digitalen Kommunikation für den Arbeitskontext lernen können

2022. Fachsymposium ITAS-BAuA (2022), Karlsruhe, Germany, November 3, 2022

KI in der Digitalen Kommunikation

2022. Medien Triennale Südwest "KI & Medien gemeinsam gestalten" (#MTSW 2022), Saarbrücken, Germany, October 12, 2022

Deepfakes & Co: Digital misinformation as a challenge for democratic societies

2022. 5th European Technology Assessment Conference "Digital Future(s). TA in and for a Changing World" (ETAC 2022), Karlsruhe, Germany, July 25–27, 2022

Über die Folgen von perfekten Täuschungen und die Herausforderungen für eine multidimensionale Regulierung

2022. "Wie viel Wahrheit vertragen wir?" - Ringvorlesung / Universität Köln (2022), Online, June 28, 2022

Deepfakes: die Kehrseite der Kommunikationsfreiheit

2022. Gastvortrag Universität Graz, Siebente Fakultät (2022), Online, June 1, 2022

Künstliche Intelligenz/Maschinelles Lernen

2021. Handbuch Technikethik. Ed.: A. Grunwald, 424–428, J.B. Metzler. doi:10.1007/978-3-476-04901-8_81

Trust (erosion) in AI regulation : Dimensions, Drivers, Contradictions?

2021. International Lecture Series by Fudan University: Trust and AI (2021), Online, December 14, 2021

Zwischen Agenda, Zwang und Widerspruch. Der liberale Staat und der Fall KI

2021. NTA9-TA21: Digital, Direkt, Demokratisch? Technikfolgenabschätzung und die Zukunft der Demokratie (2021), Online, May 10–12, 2021

Collecting Data, Tracing & Tracking

2021. Big Data-Hype: Aus den Augen, aus dem Sinn? „Das Öl des 21. Jahrhunderts“: Früh wieder still und zur Selbstverständlichkeit geworden ("Deep Dive" Online-Experten-Roundtable 2021), Cologne, Germany, March 10, 2021

Herausforderungen bei der Regulierung von Deepfakes

2021. Fachkonferenz „Vertrauen im Zeitalter KI-gestützter Bots und Fakes: Herausforderungen und mögliche Lösungen“ (2021), Online, November 4, 2021

Fakten, Fakes, Deep fakes? Die Rolle von KI in der öffentlichen Meinungsbildung

2021. KIT-Alumni Talk (2021), Online, November 16, 2021

Technikfolgenabschätzung für eine digitale Arbeitswelt

2021. Zukunftsforum Schweinfurt : Robotik und digitale Produktion (2021), Schweinfurt, Germany, June 7, 2021

Evaluating the Effect of XAI on Understanding of Machine Learning Models

2021. Philosophy of Science meets Machine Learning (2021), Tübingen, Germany, November 9–12, 2021

Militärische KI: Sprachspiele der Autonomie und ihre politischen Folgen [Military AI: Language games of autonomy and their political consequences]

2020. Philosophie der KI - Darmstädter Workshop (2020), Darmstadt, Germany, February 21, 2020

Sociotechnical weapons: AI myths as national power play

2020. Locating and Timing Matters: Significance and agency of STS in emerging worlds (EASST/4S 2020), Online, August 18–21, 2020

Contact

Karlsruhe Institute of Technology (KIT)

Institute for Technology Assessment and Systems Analysis (ITAS)

P.O. Box 3640

76021 Karlsruhe

Germany

Tel.: +49 721 608-26815
